Lessons from the First MCP Hackathon in NYC: What Our 3rd Place Vuln Analyst Agent Revealed about Enterprise Security

Why our impressive, award-winning AI agent is completely unusable in a real enterprise.

This past weekend, our team was proud to win 3rd place at NYC's first-ever Model Context Protocol (MCP) Hackathon, hosted at the Automattic offices. It was a whirlwind of coding, creativity, and collaboration. I want to give a huge shout-out to my teammates and fellow builders, Darsh Shah and Khizar Anjum, for their amazing work and what we achieved in such a short time.

We built a CVE Threat Assessment Agent using MCP that is designed to automate the complex, manual process of security triage. The project was a blast to build, and you can check out the full repository on GitHub.

The experience was a powerful lesson. Building a functional, impressive agent is now easier than ever. But the journey from a cool demo to an agent that's truly enterprise-ready reveals a critical new set of challenges around security, compliance, and governance. This post is about that gap—and what we as builders need to do to cross it.

The Analyst's Dilemma: Automating a High-Stakes Workflow

Every security analyst knows the feeling. You log in on Monday morning to a dashboard flooded with new CVE alerts, all flashing red. The business is asking, "Are we safe?" and your job is to find the signal in the noise. The tools we use to identify and patch vulnerabilities aren’t keeping up with the pace at which threats are being introduced into software, and more of that software is going to be written quickly through vibe coding.

The vulnerability triage process boils down to two critical, time-consuming questions:

“Are we even affected by this?”

"If we are, is this vulnerability actually exploitable in the wild and should we care?"

Answering these questions seemed like the perfect job for a specialized CVE Threat Assessment Agent.

Our Solution: Deconstructing the Agent's Multi-Stage Trajectory

We gave our agent a simple goal and a set of tools accessible via an MCP server we created. The agent then autonomously used those tools to execute a sophisticated, multi-stage workflow, or “trajectory,” to achieve its goal.

Phase 1: Analysis & Triage

Step 1: CVE Validation: The agent’s first action was to use MCP to call the get_cve_from_nist tool to fetch the official CVSS score and description from NIST, validating the threat.

Step 2: Business Impact Analysis: It then immediately pivoted to an internal assets resource that listed our deployed software to determine if our company was affected. This step is crucial; it calculates a “User Threat Level” based on asset criticality and environment, answering the "do we care?" question first.

Step 3: Active Exploitation Assessment: If an asset was affected, the agent queried the CISA KEV catalog using the search_kev tool to check for active exploitation.

Step 4: Proof-of-Concept Intelligence: Finally, it used a chain of GitHub tools we created via MCP to find repositories, list files, and extract real code snippets from public exploits.
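The first three steps above can be sketched roughly as follows. This is a minimal illustration, not the code from the hackathon repository: the tool names get_cve_from_nist and search_kev come from this post, but the in-memory data, the record shapes, the placeholder CVE ID, and the rule inside user_threat_level are all assumptions made for the example. Step 4 (the GitHub tool chain) is left out to keep it short.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-ins for the MCP tools and resources.
# Real versions would query NIST, the asset inventory, and the CISA KEV catalog.
FAKE_NVD = {
    "CVE-0000-0001": {"cvss": 9.8, "product": "examplelib", "description": "placeholder entry"},
}
FAKE_KEV = {"CVE-0000-0001"}
ASSETS = [
    {"name": "billing-api", "software": "examplelib", "criticality": "high", "environment": "production"},
    {"name": "wiki", "software": "otherlib", "criticality": "low", "environment": "staging"},
]


@dataclass
class Assessment:
    cve_id: str
    cvss: float
    affected_assets: list
    user_threat_level: str
    actively_exploited: bool


def get_cve_from_nist(cve_id):
    """Step 1: return the CVE record, or None if it is unknown."""
    return FAKE_NVD.get(cve_id)


def search_kev(cve_id):
    """Step 3: report whether the CVE appears in the (fake) KEV catalog."""
    return cve_id in FAKE_KEV


def user_threat_level(affected_assets):
    """Step 2 scoring. Assumed rule: high-criticality production assets dominate."""
    if not affected_assets:
        return "NONE"
    for asset in affected_assets:
        if asset["criticality"] == "high" and asset["environment"] == "production":
            return "HIGH"
    return "MEDIUM"


def assess(cve_id):
    """Run steps 1 to 3 in order and collect the findings."""
    cve = get_cve_from_nist(cve_id)
    if cve is None:
        raise ValueError(f"unknown CVE: {cve_id}")
    affected = [a for a in ASSETS if a["software"] == cve["product"]]
    # Only check for active exploitation when something we run is affected.
    exploited = bool(affected) and search_kev(cve_id)
    return Assessment(
        cve_id=cve_id,
        cvss=cve["cvss"],
        affected_assets=[a["name"] for a in affected],
        user_threat_level=user_threat_level(affected),
        actively_exploited=exploited,
    )


if __name__ == "__main__":
    print(assess("CVE-0000-0001"))
```

In the real agent the ordering is chosen by the model at run time. Here it is hard-coded so that the data flow between the steps is easy to follow.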

Phase 2: Synthesis & Delivery

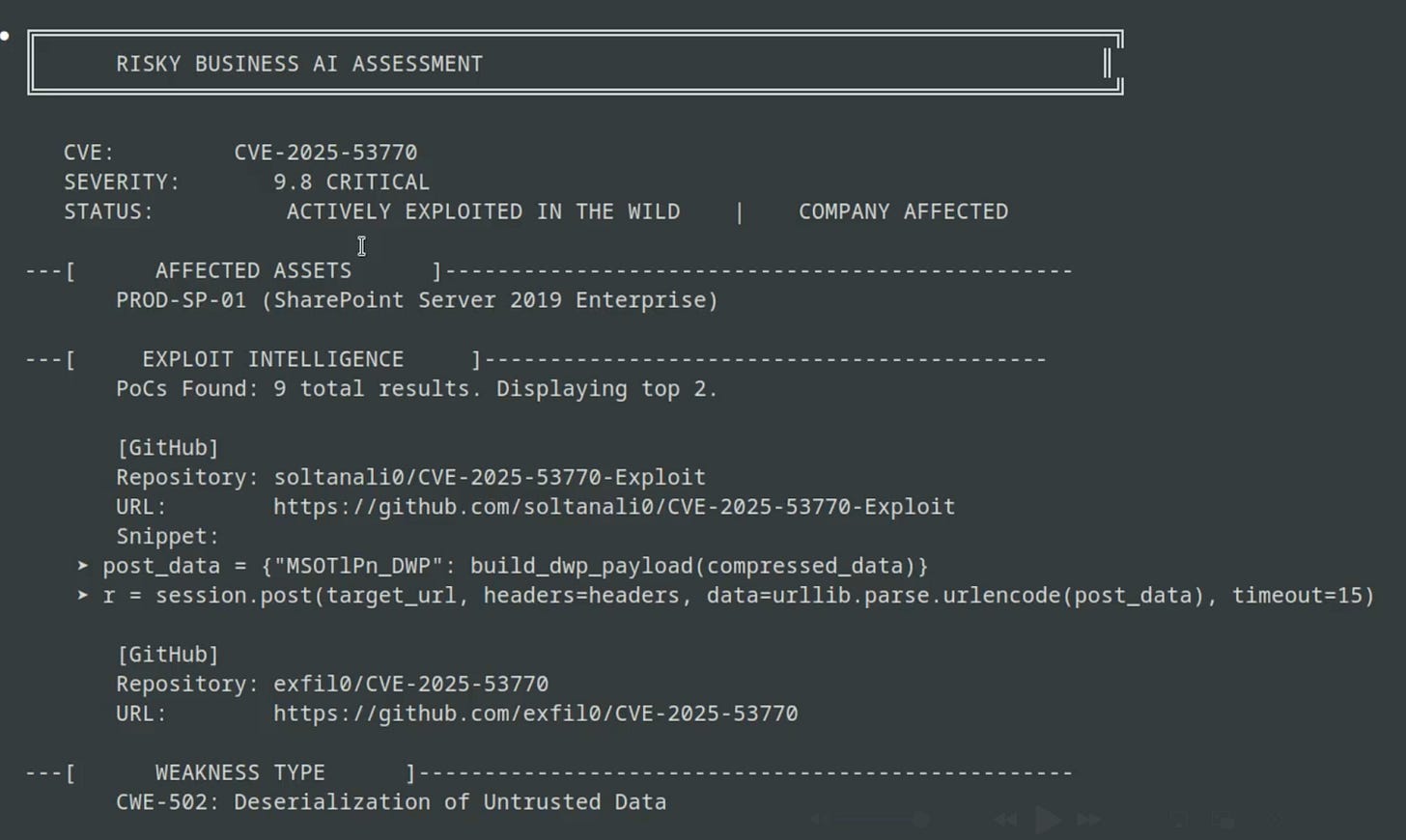

Step 5: Generate the “ThreatCard” Dashboard: The agent synthesized all of its findings into a clear, text-based dashboard for the analyst.

Step 6: Deliver the "AI Analyst Briefing": It then generated a spoken summary of its findings using ElevenLabs for a quick, C-level briefing.

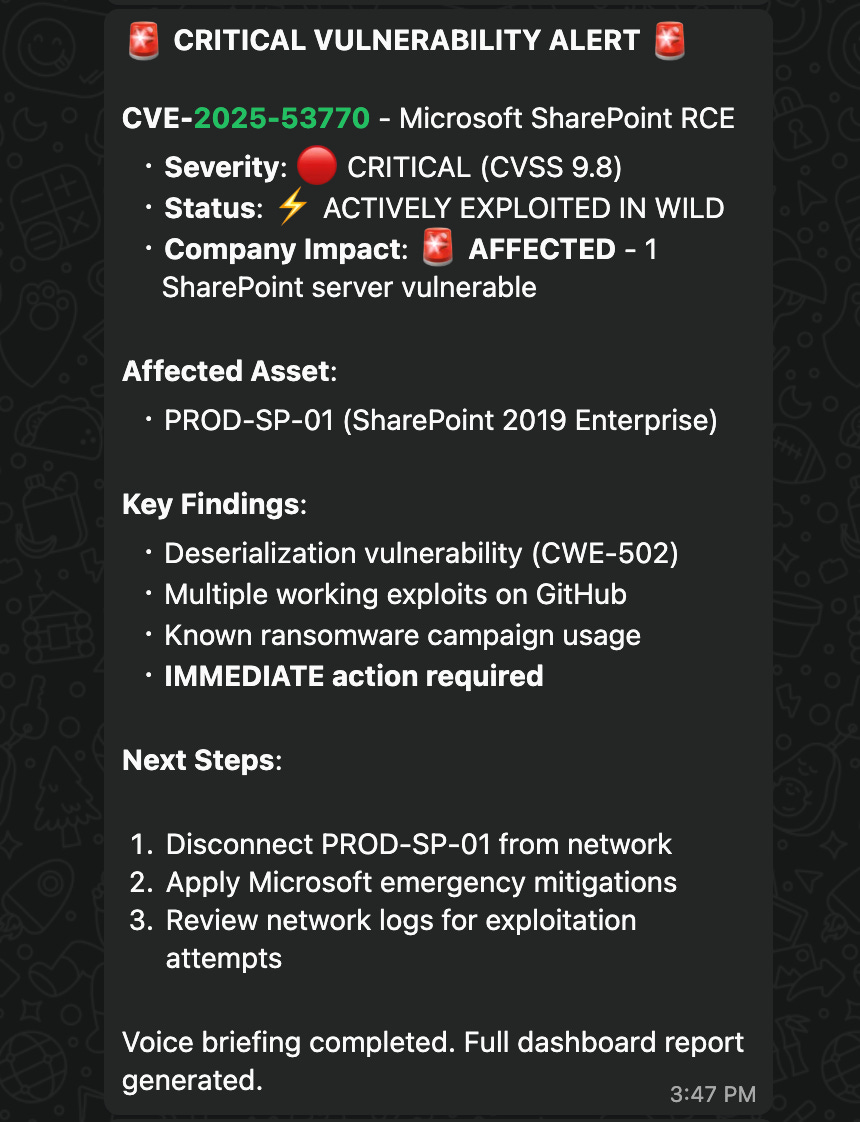

Step 7: Send the P1 Alert: The final step was pure action: sending a high-priority alert with all the key details to the on-call pager through Beeper, which had just released its own MCP server, announced at the hackathon.
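To give a feel for the text-based dashboard in Step 5, here is a small sketch of how such a card could be rendered. The function name render_threat_card, the field labels, and the layout are assumptions for illustration. The actual ThreatCard format in our project may differ.

```python
def render_threat_card(cve_id, cvss, threat_level, exploited, affected_assets):
    """Return a boxed, fixed-width text summary of one assessment."""
    lines = [
        f"THREATCARD {cve_id}",
        f"CVSS score         : {cvss:.1f}",
        f"User Threat Level  : {threat_level}",
        f"Actively exploited : {'yes' if exploited else 'no'}",
        f"Affected assets    : {', '.join(affected_assets) or 'none'}",
    ]
    # Pad every row to the widest line so the box edges line up.
    width = max(len(line) for line in lines)
    border = "+" + "-" * (width + 2) + "+"
    body = [f"| {line.ljust(width)} |" for line in lines]
    return "\n".join([border, *body, border])


if __name__ == "__main__":
    print(render_threat_card("CVE-0000-0001", 9.8, "HIGH", True, ["billing-api"]))
```

Keeping the card as plain text means the same string can be shown in a terminal, pasted into a ticket, or passed to a text-to-speech step.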

In under a minute, the agent successfully compressed what could have been hours of manual human work into a single, automated trajectory.

The agent synthesized all of its findings into a clear, text-based dashboard for the analyst, giving them an instant verdict on the vulnerability at a glance:

We then generated an audio briefing using ElevenLabs for busy CISOs who need up-to-date information and can listen to what’s happening on their phones.

The final step was moving to remediation—sending a high-priority alert with all the key details and next steps to the on-call pager through Beeper:

You can see the full demo, including the audio briefing, in action here:

The Revelation: From a Winning Demo to an Enterprise-Ready Agent

Our demo was a success, and we were super proud to win 3rd place at the hackathon. You can watch our final pitch here:

But when we put on our enterprise security hats, our CVE Threat Assessment Agent revealed a series of critical governance gaps that we hadn't built solutions for.

If we tried to implement this agent in the enterprise, a CISO or GRC officer would immediately ask a series of hard questions that our impressive demo couldn't answer.

Control: What stops a bug from causing our agent to misread the asset list and ignore a critical threat?

Audit: How can we prove to an auditor that the agent’s “User Threat Level” calculation was correct and tamper-proof?

Security: What prevents an attacker from creating a malicious GitHub repo that our get_github_file_content tool downloads, compromising the agent itself?
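For the audit question, one common building block is a hash-chained log, where each entry commits to the one before it. The sketch below is an assumption about how that could look, not something we built at the hackathon, and the AuditLog class and its event fields are hypothetical. Note that this gives tamper evidence rather than tamper proofing: someone who can rewrite the whole log can also recompute every hash, so the latest hash would still need to be anchored somewhere the agent cannot write.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which every entry includes the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, event):
        # sort_keys makes the serialization stable across runs.
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self):
        """Recompute the chain and report whether every link still matches."""
        prev_hash = GENESIS
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["event"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"step": "user_threat_level", "inputs": {"criticality": "high"}, "output": "HIGH"})
    log.append({"step": "send_alert", "output": "sent"})
    print(log.verify())
    log.entries[0]["event"]["output"] = "LOW"  # simulate tampering
    print(log.verify())
```

Recording the inputs alongside the output of each calculation is what lets an auditor later recompute the result independently.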

Deconstructing the Revealed Risks

By building our agent, we inadvertently created a perfect model of the new risks that builders must now confront.

The “Prompt-as-Policy” Fallacy: Our SYSTEM_PROMPT.md includes "Key Behavioral Rules" such as "Always use the MCP server tools." For a hackathon, this is fine. For an enterprise, it is not governance. A prompt is a suggestion, not a deterministic control. Relying on it is an attempt to enforce policy through hope, which is not a security strategy.
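By contrast, a deterministic control lives in code that sits between the model and the tools. The sketch below shows one assumed shape for such a gate: an allowlist plus argument validation that runs on every call, whatever the prompt says. ToolGateway and PolicyViolation are hypothetical names, and the stub tools stand in for real MCP tool handlers.

```python
import re

# CVE IDs have the form CVE-<4-digit year>-<4 or more digits>.
CVE_ID = re.compile(r"CVE-\d{4}-\d{4,}")


class PolicyViolation(Exception):
    """Raised when a requested tool call breaks a hard rule."""


class ToolGateway:
    """Checks every tool call against an allowlist before dispatching it."""

    def __init__(self, tools, allowed):
        self._tools = dict(tools)
        self._allowed = set(allowed)

    def call(self, name, **kwargs):
        if name not in self._allowed or name not in self._tools:
            raise PolicyViolation(f"tool not permitted: {name}")
        cve_id = kwargs.get("cve_id")
        if cve_id is not None and not CVE_ID.fullmatch(cve_id):
            raise PolicyViolation(f"malformed CVE ID: {cve_id!r}")
        return self._tools[name](**kwargs)


if __name__ == "__main__":
    gateway = ToolGateway(
        tools={"search_kev": lambda cve_id: True, "send_alert": lambda message: "sent"},
        allowed={"search_kev"},
    )
    print(gateway.call("search_kev", cve_id="CVE-0000-0001"))
    try:
        gateway.call("send_alert", message="P1")
    except PolicyViolation as exc:
        print(exc)
```

The point of the design is that the model can ask for anything, but only calls that pass the gate are executed.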

The Tool Misuse Risk: Our agent has powerful tools with real-world consequences, like the Beeper alert. Without controls, we can't enforce the Principle of Least Privilege on the tool itself. What stops the agent from sending 1,000 alerts instead of one? What if the agent is compromised and the alert tells the on-call team to do something destructive?
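One way to bound the "1,000 alerts" failure mode is a hard budget on the alert tool that is enforced outside the agent. The following is a minimal sketch under assumed names (AlertBudget, send_alert, and the deliver callback are hypothetical, and the limits are arbitrary). It does not address the content of a malicious alert, only the volume.

```python
import time


class AlertBudget:
    """Allow at most max_alerts sends within any window_seconds span."""

    def __init__(self, max_alerts, window_seconds, clock=time.monotonic):
        self._max = max_alerts
        self._window = window_seconds
        self._clock = clock  # injectable so the limiter can be tested
        self._sent = []      # timestamps of alerts that were allowed

    def allow(self):
        now = self._clock()
        # Forget sends that have aged out of the window.
        self._sent = [t for t in self._sent if now - t < self._window]
        if len(self._sent) >= self._max:
            return False
        self._sent.append(now)
        return True


def send_alert(budget, deliver, message):
    """Deliver the message only if the budget permits; report what happened."""
    if not budget.allow():
        return False
    deliver(message)
    return True


if __name__ == "__main__":
    budget = AlertBudget(max_alerts=1, window_seconds=60)
    outbox = []
    print(send_alert(budget, outbox.append, "P1: first alert"))
    print(send_alert(budget, outbox.append, "P1: duplicate alert"))
    print(outbox)
```

A dropped alert should itself be logged and surfaced to a human, since silently discarding a real P1 would be its own failure.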

The Flawed Logic Risk: Our agent calculates a “User Threat Level” based on asset criticality. But what if there's a bug in that logic? In an enterprise, you can't have a black box making critical risk calculations without an independent system to verify its reasoning and enforce constraints.
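An independent check does not need to reproduce the agent's reasoning. It can enforce a floor that the agent's answer may never drop below. The sketch below assumes a simple ordered scale and a few floor rules, all of them hypothetical and chosen only to illustrate the pattern. A real rule set would come from the organization's risk policy.

```python
LEVELS = ["NONE", "LOW", "MEDIUM", "HIGH", "CRITICAL"]  # assumed ordered scale


def minimum_level(criticality, environment, in_kev):
    """Assumed floor rules, evaluated separately from the agent's own logic."""
    if in_kev and environment == "production":
        return "CRITICAL" if criticality == "high" else "HIGH"
    if criticality == "high":
        return "MEDIUM"
    return "LOW"


def verify_claim(claimed, criticality, environment, in_kev):
    """Return (ok, floor): ok is False if the agent's level is below the floor."""
    if claimed not in LEVELS:
        raise ValueError(f"unknown threat level: {claimed}")
    floor = minimum_level(criticality, environment, in_kev)
    ok = LEVELS.index(claimed) >= LEVELS.index(floor)
    return ok, floor


if __name__ == "__main__":
    # The agent under-rates a KEV-listed CVE on a critical production asset.
    print(verify_claim("LOW", "high", "production", True))
```

When the check fails, the safer default is to escalate to the floor level and flag the disagreement for human review rather than trust either side silently.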

Conclusion: The Next Challenge for Agent Builders

The first wave of agent development was about proving capability—showing the world the incredible, complex tasks that agents can perform. Our hackathon project is a testament to how far that wave has come.

But the next, more important wave is about proving trustworthiness.

As builders, the challenge is no longer just “what can my agent do?” but rather, “how can my customers control and verify what my agent does?” We need to shift our focus from simply building capabilities to building them with the provable security and governance that enterprises demand. Answering these hard questions about control, audit, and security is the key to unlocking the true potential of agents in the enterprise.
