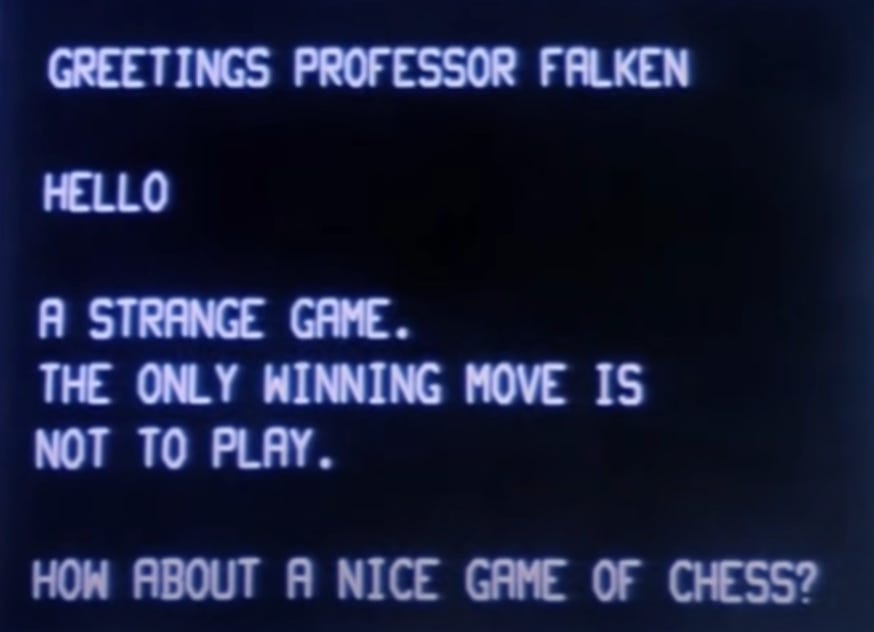

The CISO’s Catch-22: Navigating the 'Zugzwang' of AI Agents

To allow agents, or not to allow agents, that is the question.

The reality for CISOs has shifted over the last 18 months. The board is asking for an AI strategy, and business units are demanding access to the latest AI tools to drive efficiency and stay competitive. The pressure to act is immense, and CISOs are caught (as they often are) in a Catch-22: either they allow agents and accept the security and governance risk, or they deny agents and accept the business risk of falling behind competitors.

This tough spot now has a formal name, courtesy of a brilliant Feb. 2025 paper by Lampis Alevizos, “The AI Security Zugzwang.” In chess, “zugzwang” (German for “compulsion to move”) describes a position in which a player is obligated to move, but every possible move worsens their position.

Alevizos argues that the term perfectly captures the CISO’s current Catch-22 with AI. This post uses Alevizos’s zugzwang framework to help CISOs diagnose their specific situation, communicate the stakes to the business, and define what’s needed to change the rules of the game.

The Two Forced Moves: Incur Security Debt or Accept Immediate Risk

The zugzwang framework holds that inaction is not a safe move: choosing not to act actively worsens your position. That leaves CISOs with a forced choice between two bad outcomes.

Path A: The Illusion of Safety in Delay (The "Security Debt" Path)

Choosing to wait until security is “perfect” isn't a neutral move. As Alevizos notes in his paper, maintaining the status quo with AI means your position is actively deteriorating. This path leads to a widening productivity gap relative to AI-enabled competitors, and to talent-retention problems as your best people leave for more innovative environments. More importantly, it accumulates what the paper terms “security debt”: new AI vulnerabilities compound over time, security patches become obsolete within weeks, and delayed decisions create cascading risks that become progressively harder to address.

Path B: The Recklessness of Speed (The "Immediate Risk" Path)

Rushing to deploy agents to satisfy business demands means knowingly accepting novel threats such as prompt injection, data exposure through LLMs, and potential data poisoning of your models. This is what the paper calls an “acceleration tactic,” and it often results in “production-discovered vulnerabilities” that lock security teams into a reactive, unsustainable incident-response posture. The business gets a quick win, but at the cost of a new class of risk with no adequate controls in place.

The Root of the Problem: Three Forces Driving the Zugzwang

CISOs are in this difficult position not because of a failure of strategy, but because of systemic issues that Alevizos identifies as fundamental “cross-cutting patterns,” which affect organizations regardless of size or security maturity. Three such forces are the engine behind the zugzwang.

1. The Capability Gap: You Can't Secure What You Don't Understand

This is the gap between the tools and talent you have and the tools and talent you need.

Inadequate Tooling: Traditional security tools are proving insufficient. They struggle to monitor for AI-specific threats, verify AI and agent system behavior, or provide visibility into the context of user prompts.

The Expertise Chasm: Organizations struggle to find security professionals with the niche expertise needed to apply established security practices to emerging AI and agent threats.

The Opaque Supply Chain: It is incredibly difficult to guarantee security when vulnerabilities might be hidden deep within the pre-trained models or third-party components that agents are built upon.

2. Temporal Dynamics: The Problem Gets Worse by Waiting

Unlike traditional security decisions, where waiting can bring clarity, the AI zugzwang is defined by accelerating risk: the paper notes that the longer organizations delay decisions, the faster their security position deteriorates. The resulting security debt compounds daily. New AI model versions introduce new vulnerabilities, security patches for older versions become obsolete within weeks, and the window to act before falling behind competitively keeps shrinking.

3. Regulatory Compliance: The Rules Are Changing Mid-Flight

The third force adding pressure is the complex and rapidly evolving landscape of AI regulation. As the paper highlights, organizations are often caught between conflicting regulatory requirements from different jurisdictions. Compliance with one framework can inadvertently force a violation of another. This is made worse by the fact that AI technology is developing far faster than the regulations that govern it, forcing CISOs to make decisions today that will be judged against the compliance frameworks of tomorrow.

Identifying Your 'Catch-22': The Four Flavors of AI Security Zugzwang

To move from theory to action, we can use the paper’s taxonomy to diagnose the specific type of zugzwang an organization is facing.

1. The Adoption Zugzwang: The Market is Forcing Your Hand

This occurs when external market pressure forces an AI adoption decision before you're ready, making security vulnerabilities an inevitable starting point. Time pressure is the dominant force. You're in it if business units are demanding AI deployment within the next three to six months, or if critical security assessments are still pending while you face hard deployment deadlines. Typical drivers include competitors actively deploying similar AI capabilities, new AI models and agents arriving weekly or monthly and each requiring evaluation, and new industry regulations that might mandate AI or agents for functions like fraud detection.

2. The Implementation Zugzwang: When Security Breaks the System

This emerges from the direct technical conflict between applying necessary security controls and preserving the AI system's core functionality or performance. You're in it if implementing a security control pushes a model’s accuracy below acceptable thresholds or degrades an agent’s behavior beyond what the business can tolerate, or if essential AI features are blocked by your existing security tools. This is often driven by AI models that require real-time processing legacy security tools can't handle, and by available security tools that require six or more months of complex integration work.

3. The Operational Zugzwang: The Daily Grind of Managing Risk

This is the most persistent category, characterized by the continuous tension between security requirements and the day-to-day operational demands of a live AI system. You're in it if you must deploy security patches without complete testing, if security monitoring adds unacceptable latency to critical AI or agent operations, or if a security change in one AI component breaks functionality in connected systems. This is often driven by models that need frequent updates to maintain accuracy but have no security validation process, and by an attack surface that expands with every new AI feature.

4. The Governance Zugzwang: Making Rules in a Vacuum

This arises when you must make immediate decisions about AI and agent security policy and decision rights without established frameworks to guide them. You're in it if critical security policies must be implemented before governance structures have matured, or if security roles and responsibilities are still in flux. This is often driven by board-mandated AI initiatives that conflict with security assessment timelines, or by new regulations that demand immediate changes without providing clear implementation guidance.

A Call for a New Approach and New Controls

The AI Security Zugzwang is not a traditional trade-off that can be solved with a bigger budget or more staff. AI is shifting our security paradigms, and we need new playbooks for this new era.

To escape this Catch-22, CISOs must first change how they communicate these complex, forced-choice decisions. The conversation with the business must shift from a simple risk matrix (which Alevizos maintains is insufficient for these dynamic scenarios) to a more nuanced discussion: “Which negative outcome is more manageable for the business right now, and for how long?”

Ultimately, the path forward requires a new class of security capabilities designed for this reality. CISOs must champion the need for tooling that provides deep visibility into AI and agent behavior, enables adaptive and modular security controls that don’t degrade functionality, and helps manage the entire AI and agent lifecycle in a world where risk is a continuous state, not a single event in time.